Autonomous Robots with Futuristic Multi-Sensor AI Technology

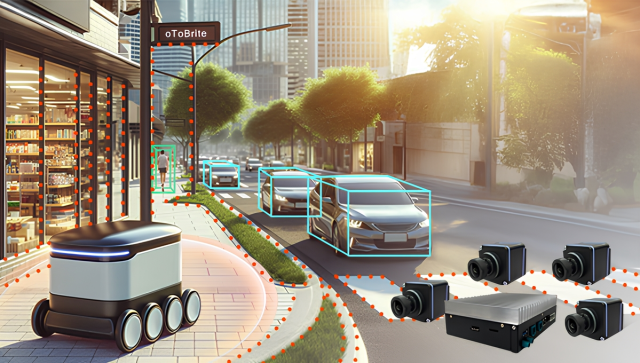

Autonomous robots and unmanned vehicles require precise positioning to navigate efficiently, yet many existing solutions depend heavily on costly HD maps, 3D LiDAR, or GNSS/RTK signals. oToBrite’s multi-camera vision-AI SLAM system, oToSLAM, offers an alternative that provides reliable mapping and positioning in both indoor and outdoor environments without the need for external infrastructure.

Using four automotive-grade cameras, an edge AI device (<10 TOPS), and advanced vision-AI technology, the system integrates key technologies such as object classification, freespace segmentation, and semantic and 3D feature mapping, together with optimized low-bit AI model quantization and pruning. This cost-effective yet high-performance solution achieves positioning accuracy of up to 1 cm (depending on the environment and the use of additional sensors), outperforming conventional methods in both affordability and precision.

In developing vision SLAM technology, the main challenge we encountered was the limitation of traditional CV-based SLAM. While this approach is computationally efficient, its accuracy and adaptability across diverse environments were insufficient for real-world deployment. In particular, CV-based approaches struggled with low-texture scenes, dynamic objects, and varying lighting conditions, which degraded localization performance. After extensive testing and evaluation across multiple use cases, we chose to adopt vision-AI SLAM technology. By leveraging deep learning, we were able to extract more robust and meaningful 3D features, significantly improving positioning accuracy and environmental adaptability. This transition to AI-driven SLAM allowed us to build a solution that not only performs reliably in complex environments but also scales effectively for mass production and long-term maintenance.

However, implementing AI algorithms on the TI TDA4V MidEco 8-TOPS platform presented new challenges. The model processes images layer by layer to generate features, but not all layers are natively supported on the production platform. While standard layers such as CONV and RELU are compatible, others require custom development. To bridge this gap, we created additional algorithm packages that ensure compatibility and preserve model functionality while adapting the model for real-world deployment.
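The layer-support gap described above can be illustrated with a minimal sketch that splits a model's layers into those the platform runs natively and those that need a custom implementation. Only CONV and RELU come from the text; the other operator names (`PIXEL_SHUFFLE`, `L2_NORM`), the layer names, and the `partition_layers` helper are hypothetical and do not reflect the actual platform SDK or the oToSLAM model.

```python
# Assumption: only CONV and RELU are named in the text as natively supported.
# A real supported-op list would come from the platform's own documentation.
NATIVE_OPS = {"CONV", "RELU"}


def partition_layers(layers, native_ops=NATIVE_OPS):
    """Split (layer_name, op_type) pairs into native and custom lists."""
    native, custom = [], []
    for name, op_type in layers:
        if op_type.upper() in native_ops:
            native.append(name)
        else:
            custom.append(name)
    return native, custom


# Hypothetical feature-extraction model; names are illustrative only.
model_layers = [
    ("conv1", "CONV"),
    ("relu1", "RELU"),
    ("detector_head", "PIXEL_SHUFFLE"),
    ("descriptor_norm", "L2_NORM"),
]

native, custom = partition_layers(model_layers)
print("runs natively:", native)
print("needs a custom package:", custom)
```

An audit like this is typically the first step before deciding which layers to reimplement and which to replace with supported equivalents.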

Another key challenge we faced during the transition to mass production was the limitation of relying solely on non-semantic feature points generated by the model. Although these 3D feature points are highly repeatable and robust across varying perspectives, they lack semantic context, such as the identification of curbs, lane markings, walls, and other critical environmental structures. Through comprehensive analysis across diverse driving scenarios, we found that combining 3D non-semantic features with semantic feature points significantly improves the precision and robustness of our VSLAM system. This hybrid approach lets us leverage the geometric stability of non-semantic features while enhancing environmental understanding through semantic context. As a result, integrating both feature types within the VSLAM pipeline has become a core strategy for overcoming the limitations of pure 3D point-based tracking. It plays a vital role in achieving higher accuracy, consistency, and resilience, especially in complex, dynamic environments, and serves as a key differentiator for our solution in the market.
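One simple way to picture the hybrid approach is a translation-only position correction in which semantic matches (for example, a curb) are weighted differently from non-semantic 3D feature matches. The sketch below is an illustration under stated assumptions, not the oToSLAM method: the `Match` type, the 2D simplification, and the weight values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Match:
    observed: Tuple[float, float]  # point seen in the current frame (metres)
    mapped: Tuple[float, float]    # corresponding point stored in the map
    semantic_label: Optional[str] = None  # e.g. "curb"; None if non-semantic


def estimate_offset(matches, semantic_weight=2.0, geometric_weight=1.0):
    """Weighted least-squares translation aligning observed points to the map.

    For a translation-only model, the solution is the weighted mean of the
    per-match residuals (mapped - observed). Weights are assumed values.
    """
    sum_x = sum_y = sum_w = 0.0
    for m in matches:
        w = semantic_weight if m.semantic_label else geometric_weight
        sum_x += w * (m.mapped[0] - m.observed[0])
        sum_y += w * (m.mapped[1] - m.observed[1])
        sum_w += w
    if sum_w == 0.0:
        raise ValueError("at least one match with non-zero weight is required")
    return sum_x / sum_w, sum_y / sum_w


matches = [
    Match(observed=(0.0, 0.0), mapped=(1.0, 0.0)),
    Match(observed=(1.0, 1.0), mapped=(2.0, 1.0)),
    Match(observed=(2.0, 0.0), mapped=(3.3, 0.0), semantic_label="curb"),
]
dx, dy = estimate_offset(matches)
print(f"position correction: dx={dx:.3f} m, dy={dy:.3f} m")
```

A production pipeline would estimate a full 6-DoF pose and reject outliers; the point here is only that both feature types can contribute to the same estimate with different confidence.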

Optimizing AI-based VSLAM models involves several challenges, including high computational complexity, difficulty in generalizing across diverse environments, and the handling of dynamic scenes. To overcome these, we adopt lightweight neural network architectures and quantization techniques for real-time performance on edge devices. Furthermore, we are not only optimizing the VSLAM models for 3D feature extraction but also adding value through semantic feature extraction via customized lightweight object classification and image segmentation. As a result, we bring multi-camera vision-AI SLAM from research to mass production on edge AI devices for autonomous robots and unmanned vehicles.
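To make the quantization step concrete, here is a minimal sketch of symmetric per-tensor quantization, a common low-bit technique. The article does not state which scheme oToSLAM uses, so the 8-bit width, the function names, and the sample weights are assumptions for illustration.

```python
def quantize_symmetric(weights, num_bits=8):
    """Map floats to signed integers in [-qmax, qmax] with one shared scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


# Illustrative weights, not taken from any real model.
weights = [0.5, -1.0, 0.25, 0.0]
q_weights, scale = quantize_symmetric(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("quantized:", q_weights)
print("max reconstruction error:", max_error)
```

The reconstruction error per weight is bounded by half the scale, which is why lower bit widths (a larger scale) trade accuracy for smaller, faster models.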

Learn more about oToSLAM: https://www.otobrite.com/product/otoslam-vision-ai-positioning-system

Appendix

Reference Models

The following models were referenced during the development process:

- ORB-SLAM: a Versatile and Accurate Monocular SLAM System

- LIFT: Learned Invariant Feature Transform

- SuperPoint: Self-Supervised Interest Point Detection and Description

- GCNv2: Efficient Correspondence Prediction for Real-Time SLAM

- R2D2: Repeatable and Reliable Detector and Descriptor

- Use of a Weighted ICP Algorithm to Precisely Determine USV Movement Parameters

Related Posts

30

Jul

Visual SLAM are replacing for the Bigger tech company ABB

-

Posted by

Editor

ABB this week said it is extending its portfolio of fully autonomous mobile robots, or AMRs, by equipping its Flexley Mover P604 with 3...

11

Jul

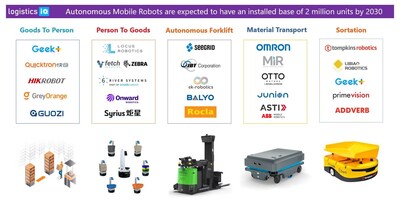

Can Robots Fix the Warehouse Worker Shortage? ProMat 2025 Says Yes

-

Posted by

Editor

The cavernous halls of McCormick Place in Chicago played host to ProMat 2025, a sprawling testament to the relentless innovation shapin...

09

Jul

The Automation can help the retailer optimise it workload

-

Posted by

Editor

When French retailer Boulanger was looking for a way to automate its warehouse fulfillment process, managers turned to Locus Robotics a...

07

Jul

Understanding the Various Kinds of AGVs and AMRs industry insider

-

Posted by

Editor

Looking for the latest innovations in automated material handling? We’ve rounded up the latest crop of Autonomous Mobile Robots (AMRs) ...

04

Jul

Warehouse Automation Market expected to be grow and exponentially expand until 2030

-

Posted by

Editor

Key Market Drivers Fueling the Growth of the Autonomous Mobile Robot (AMR) Market — The global Autonomous Mobile Robot (AMR) market is ...

02

Jul

The in house AMR system, can carry up to one ton heavy payload

-

Posted by

Editor

ABB is extending its leadership in AI-powered autonomous mobile robotics with the launch of the Flexley Mover P603 platform AMR, the mo...

25

Jun

Marina Bay Sands Uses Autonomous Robots to Streamline Back-of-House Deliveries

-

Posted by

Editor

Singapore is making significant strides in integrating automation and robotics into its business operations, with Marina Bay Sands (MB...

20

May

High-quality AMR robots are about to be launched on the market, and their future direction

-

Posted by

Editor

Forklift replacements and legged robots are two AMR solutions our panel of experts anticipate will be seen in the future. “It’s no secr...

15

May

Business Insider: AMR robot for transport and delivery of material

-

Posted by

Editor

Autonomous robots are becoming more commonplace in hospital environments, where they can be used to deliver supplies throughout the fac...